Snow Owl uses a number of thread pools for different types of operations. It is important that it is able to create new threads whenever needed. Make sure that the number of threads that the Snow Owl user can create is at least 4096.

This can be done by setting ulimit -u 4096 as root before starting Snow Owl, or by setting nproc to 4096 in /etc/security/limits.conf.
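A quick way to confirm the limit before startup is to compare the current value with the required minimum. The following is a minimal sketch, not part of Snow Owl: the helper name limit_ok is made up for this example, and it assumes a shell whose ulimit builtin supports the -u option (such as bash).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with Snow Owl): succeeds when a limit
# value meets the required minimum. "unlimited" always passes.
limit_ok() {
  local current="$1" required="$2"
  [ "$current" = "unlimited" ] && return 0
  [ "$current" -ge "$required" ] 2>/dev/null
}

# Check the maximum number of user processes (threads) for the current user.
if limit_ok "$(ulimit -u)" 4096; then
  echo "thread limit is sufficient"
else
  echo "thread limit is below 4096"
fi
```

Run the check as the user that starts Snow Owl, since limits are applied per user.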

The package distributions when run as services under systemd will configure the number of threads for the Snow Owl process automatically. No additional configuration is required.

By default, Snow Owl starts and connects to an embedded Elasticsearch cluster available at http://localhost:9200. This cluster has only a single node and its discovery method is set to single-node, which means it cannot connect to other Elasticsearch clusters and is used exclusively by Snow Owl.

This single-node Elasticsearch cluster can easily serve Snow Owl in testing, evaluation and small authoring environments, but it is recommended to customize how Snow Owl connects to an Elasticsearch cluster in larger environments (especially when planning to scale with user demand).

You have two options to configure Elasticsearch used by Snow Owl.

The first option is to configure the underlying Elasticsearch instance by editing the configuration file elasticsearch.yml, which, depending on your installation, is available in the configuration directory. If the file does not exist, you can create it, and Snow Owl will pick it up during the next startup.

The second option is to configure Snow Owl to use a remote Elasticsearch cluster without the embedded instance. To use this feature you need to set the repository.index.clusterUrl configuration parameter to the remote address of your Elasticsearch cluster. When Snow Owl is configured to connect to a remote Elasticsearch cluster, it won't boot up the embedded instance, which reduces the memory requirements of Snow Owl.

This section describes the use case scenarios present in the world of SNOMED CT and how Snow Owl can be used in those scenarios to maximize its full potential. Each scenario comes with a summary and a pros/cons section to help your decision making process when selecting the appropriate scenario for your use case.

This section includes information on how to set up Snow Owl and get it running using standalone installation methods.

Snow Owl is built using Java and requires at least Java 17 to run. Only Oracle’s Java and the OpenJDK are supported.

We recommend installing the latest version in the Java 17 release series or, more generally, a supported LTS version of Java.

The version of Java that Snow Owl will use can be configured by setting the JAVA_HOME environment variable.

Snow Owl uses an mmapfs directory by default to store its data. The default operating system limits on mmap counts are likely to be too low, which may result in out of memory exceptions.

On Linux, you can increase the limits by running the following command as root:

sysctl -w vm.max_map_count=262144

To set this value permanently, update the vm.max_map_count setting in /etc/sysctl.conf. To verify after rebooting, run sysctl vm.max_map_count.

The RPM and Debian packages will configure this setting automatically. No further configuration is required.

Snow Owl is now running but does not contain any content whatsoever. To be able to import or author terminology data a resource has to be created beforehand. There are three major resource types in the system:

Code Systems (e.g. SNOMED CT, ATC, LOINC, ICD-10)

Value Sets

Concept Maps

For the sake of this quick start guide, we will follow along the path of how to create a SNOMED CT code system, import content and query concepts based on different criteria.

If we take a look at, for example, the list of known code systems, we get an empty result set:

To import SNOMED CT content, we have to create a code system first using the following request:

The request body includes:

The code system identifier (SNOMEDCT)

Various pieces of metadata offering a human-readable title, status, contact information, URL and OID for identification, etc.

The tooling identifier snomed that points to the repository that will store content

If the request succeeds the server returns a 201 Created response with a Location header value pointing to the created terminology resource's location.
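To follow the created resource from a script, the Location value can be picked out of the raw response headers. The sketch below is illustrative only: extract_location is a hypothetical helper, and the canned response stands in for real headers, which could be captured with curl options such as -s -D - -o /dev/null.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read raw HTTP response headers on stdin and print
# the value of the Location header (header names are case-insensitive).
extract_location() {
  awk 'tolower($1) == "location:" { print $2 }' | tr -d '\r'
}

# Example with a canned response; the URL is illustrative, a real server
# returns its own value.
printf 'HTTP/1.1 201 Created\r\nLocation: http://localhost:8080/snowowl/codesystems/SNOMEDCT\r\n\r\n' \
  | extract_location
```

The tr call strips the carriage return that terminates every HTTP header line, so the printed value can be passed straight to another curl call.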

We can verify that the code system has been registered correctly when following that Location header value (ℹ️ NOTE: pretty is added to the request to format the response):

The expected response is:

In addition to the submitted values, you will find that additional administrative properties also appear in the output. One example is branchPath which specifies the working branch of the code system within the repository.

The code system now exists but is empty. To verify this claim, we can list concepts using either Snow Owl's native API tailored for SNOMED CT or the standardized FHIR API for a representation that is uniform across different kinds of code systems – for the sake of simplicity, we will use the former on the next page.

When a new Snow Owl Terminology Server release is available we recommend performing the following steps.

New releases are going to be distributed the same way: a docker stack and its configuration within an archive.

It is advised to decompress the new release files to a temporary folder and compare the contents of ./snow-owl/docker .

[root@host]# diff /opt/snow-owl/docker/ /opt/new-snow-owl-release/snow-owl/docker/

Common subdirectories: /opt/snow-owl/docker/configs and /opt/new-snow-owl-release/snow-owl/docker/configs

diff /opt/snow-owl/docker/.env /opt/new-snow-owl-release/snow-owl/docker/.env

10c10

< ELASTICSEARCH_VERSION=7.16.3

---

> ELASTICSEARCH_VERSION=7.17.1

24c24

< SNOWOWL_VERSION=8.1.0

---

> SNOWOWL_VERSION=8.1.1

The changes usually are restricted to version numbers in the .env file. In such cases, it is equally acceptable to overwrite the contents of the ./snow-owl/docker folder as is or cherry-pick the necessary modifications by hand.

Once the new version of the files is in place, it is sufficient to issue the following commands in the folder ./snow-owl/docker. An explicit stop of the service is not required:

docker compose pull

docker compose up -d

Do not use docker compose restart because it won't pick up any .yml or .env file changes.

This guide contains all the necessary details for installing the Snow Owl Terminology Server in a production environment. The following sections will guide you on how to:

Most operating systems try to use as much memory as possible for file system caches and eagerly swap out unused application memory. This can result in parts of the JVM heap or even its executable pages being swapped out to disk.

Swapping is very bad for performance, and should be avoided at all costs. It can cause garbage collections to last for minutes instead of milliseconds and can cause services to respond slowly or even time out.

There are two approaches to disabling swapping. The preferred option is to completely disable swap, but if this is not an option, you can minimize swappiness.

Usually Snow Owl is the only service running on a box, and its memory usage is controlled by the JVM options. There should be no need to have swap enabled.

Snow Owl is provided in the following package formats:

(Pro feature)

This page describes the terminology standards offered publicly by the Swedish National Board of Health and Welfare:

(Pro feature)

First, create the ICD-10-SE Code System:

Then, import the classification content from a ClaML file (generated by B2i Healthcare):

And last, create a new version to mark the content:

(Pro feature)

First, create the ICF Code System:

Then, using column mapping import the concepts from the official TSV file:

And last, create a new version to mark the content:

Now that we have our instance up and running, the next step is to understand how to communicate with it. Fortunately, Snow Owl provides very comprehensive and powerful APIs to interact with your instance.

Among other things, the API can be used to:

Perform CRUD (Create, Read, Update, and Delete) and search operations against your terminology resources

An orderly shutdown of Snow Owl ensures that Snow Owl has a chance to clean up and close outstanding resources. For example, an instance that is shut down in an orderly fashion will initiate an orderly shutdown of the embedded Elasticsearch instance, gracefully close and disconnect connections and perform other related cleanup activities. You can help ensure an orderly shutdown by properly stopping Snow Owl.

If you’re running Snow Owl as a service, you can stop Snow Owl via the service management functionality provided by your installation.

If you’re running Snow Owl directly, you can stop Snow Owl by sending Ctrl-C if you’re running Snow Owl in the console, or by invoking the provided shutdown script as follows:

Snow Owl (with embedded Elasticsearch) uses a lot of file descriptors or file handles. Running out of file descriptors can be disastrous and will most probably lead to data loss. Make sure to increase the limit on the number of open file descriptors for the user running Snow Owl to 65,536 or higher.

For the .zip and .tar.gz packages, set ulimit -n 65536 as root before starting Snow Owl, or set nofile to 65536 in /etc/security/limits.conf.

RPM and Debian packages already default the maximum number of file descriptors to 65536 and do not require further configuration.

Execute advanced search operations such as paging, sorting, filtering, scripting, aggregations, and many others

Administer your instance data

Check your instance health, status, and statistics

Snow Owl is both a simple and complex product. We’ve so far learned the basics of what it is, how to look inside of it, and how to work with it using some of the available APIs. Hopefully, this tutorial has given you a better understanding of what Snow Owl is and more importantly, inspired you to further experiment with the rest of its great features!

| Package | Description |
| --- | --- |
| zip/tar.gz | The zip and tar.gz packages are suitable for installation on any system and are the easiest choice for getting started with Snow Owl on most systems. See: Install Snow Owl with tar.gz or zip |
| rpm | The rpm package is suitable for installation on Red Hat, CentOS, SLES, openSUSE and other RPM-based systems. RPMs may be downloaded from the Downloads section. See: Install Snow Owl with RPM |
| deb | The deb package is suitable for Debian, Ubuntu, and other Debian-based systems. Debian packages may be downloaded from the Downloads section. See: Install Snow Owl with Debian Package |

Additional code system settings stored as key-value pairs, mostly used as fall back values when not explicitly specified in a given API call targeting this resource

POST /codesystems

{

"id": "ICD10SE",

"url": "http://hl7.org/fhir/sid/icd-10-se",

"title": "ICD-10-SE",

"language": "se",

"description": "# Internationell statistisk klassifikation av sjukdomar och relaterade hälsoproblem (ICD-10-SE)",

"status": "active",

"owner": "ownerUserId",

"copyright": "",

"contact": "https://www.socialstyrelsen.se/statistik-och-data/klassifikationer-och-koder/kodtextfiler/",

"oid": "1.2.752.116.1.1.1",

"toolingId": "icd10",

"settings": {

"publisher": "Socialstyrelsen",

"isPublic": true

}

}

POST /icd10/ICD10SE/classes/import -F "file=@ICD-10-SE_2024_generated.xml"

POST /versions

{

"resource": "codesystems/ICD10SE",

"version": "2024-01-01",

"description": "2024-01-01 release",

"effectiveTime": "2024-01-01"

}

POST /codesystems

{

"id": "icf",

"url": "http://klassifikationer.socialstyrelsen.se/icf",

"title": "ICF",

"language": "se",

"description": "# Internationell klassifikation av funktionstillstånd, funktionshinder och hälsa",

"status": "active",

"contact": "[email protected] - Avdelningen för register och statistik, Enheten för klassifikationer och terminologi",

"owner": "ownerUserId",

"oid": "1.2.752.116.1.1.3",

"toolingId": "lcs",

"settings": {

"publisher": "Socialstyrelsen",

"isPublic": true

}

}

POST /lcs/icf/import?idColumn=Kod&ptColumn=Titel&synonymColumns=Beskrivning&synonymColumns=Alternativ%20titel&parentColumn=Överordnad%20kod&locale=se -F "[email protected]"

POST /versions

{

"resource": "codesystems/icf",

"version": "2024-01-01",

"description": "2024-01-01 release",

"effectiveTime": "2024-01-01"

}

./bin/shutdown

One of Snow Owl's powerful features is the ability to list concepts matching a user-specified query expression using SNOMED International's Expression Constraint Language (ECL) syntax. If you would like to know more about the language itself, visit the documentation on the official site.

In this example, we list the direct descendants of the root concept using the ECL expression <!138875005 (via the ecl query parameter), and also limit the result set to a single item using the limit query parameter:

curl -u "test:test" 'http://localhost:8080/snowowl/snomedct/SNOMEDCT/concepts?ecl=%3C!138875005&limit=1&pretty'

As no query parameter in this request would make Snow Owl differentiate between "better" and "worse" results (e.g. a search term to match), concepts in the response are sorted by identifier.

The item returned is, indeed, one of the top-level concepts in SNOMED CT:

105590001 |Substance|

{

"items": [ {

"id": "105590001",

"released": true,

"active": true,

"effectiveTime": "20020131",

"moduleId": "900000000000207008",

"iconId": "substance",

"score": 0.0,

"memberOf": [ "723560006", "733073007", "900000000000497000" ],

"activeMemberOf": [ "723560006", "733073007", "900000000000497000" ],

"definitionStatus": {

"id": "900000000000074008"

},

"subclassDefinitionStatus": "NON_DISJOINT_SUBCLASSES",

"ancestorIds": [ "-1" ],

"parentIds": [ "138875005" ],

"statedAncestorIds": [ "-1" ],

"statedParentIds": [ "138875005" ],

"definitionStatusId": "900000000000074008"

} ],

"searchAfter": "AoEpMTA1NTkwMDAx",

"limit": 1,

"total": 19

}

The number 19 in property total suggests that additional matches exist that were not included in the response this time.
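The remaining matches can be fetched page by page. The sketch below assumes that the endpoint accepts the searchAfter value from the previous response as a query parameter of the same name. This is an assumption to verify against the API reference, and next_page_url is a hypothetical helper, not part of Snow Owl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the URL of the next page from the searchAfter
# token returned in the previous response. The searchAfter query parameter
# is an assumption made for this sketch.
next_page_url() {
  local token="$1"
  printf '%s?ecl=%s&limit=1&searchAfter=%s&pretty\n' \
    'http://localhost:8080/snowowl/snomedct/SNOMEDCT/concepts' \
    '%3C!138875005' "$token"
}

# Print the URL for the page that follows the response shown above.
next_page_url 'AoEpMTA1NTkwMDAx'

# The request itself would then be sent as:
#   curl -u "test:test" "$(next_page_url 'AoEpMTA1NTkwMDAx')"
```

Tokens containing characters such as + or = would need URL-encoding before being placed in the query string.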

The preferred method of setting JVM options (including system properties and JVM flags) is via the SO_JAVA_OPTS environment variable. For instance:

export SO_JAVA_OPTS="$SO_JAVA_OPTS -Djava.io.tmpdir=/path/to/temp/dir"

./bin/startup

When using the RPM or Debian packages, SO_JAVA_OPTS can be specified in the system configuration file.

(Pro feature)

As with every other resource in Snow Owl, a LOINC Code System needs to be created using the CodeSystems API first:

POST /codesystems

{

"id": "LOINC",

"url": "http://hl7.org/fhir/sid/loinc",

"title": "LOINC",

"language": "en",

"description": "LOINC is a freely available international standard for tests, measurements, and observations",

"status": "active",

"copyright": "This material contains content from LOINC (http://loinc.org). LOINC is copyright ©1995-2023, Regenstrief Institute, Inc. and the Logical Observation Identifiers Names and Codes (LOINC) Committee and is available at no cost under the license at http://loinc.org/license. LOINC® is a registered United States trademark of Regenstrief Institute, Inc.",

"owner": "ownerUserId",

"contact": "https://loinc.org/",

"oid": "2.16.840.1.113883.6.1",

"toolingId": "loinc",

"settings": {

"publisher": "Regenstrief Institute, Inc."

}

}

Then, an official release file can be imported via the following request:

POST /loinc/LOINC/import -F "file=@Loinc_2.77.zip"

And last, create a new version to tag the content:

POST /versions

{

"resource": "codesystems/LOINC",

"version": "2.77",

"description": "LOINC 2.77 2024-02-27 release",

"effectiveTime": "2024-02-27"

}

On Linux systems, you can disable swap temporarily by running:

To disable it permanently, you will need to edit the /etc/fstab file and comment out any lines that contain the word swap.

Another option available on Linux systems is to ensure that the sysctl value vm.swappiness is set to 1. This reduces the kernel’s tendency to swap and should not lead to swapping under normal circumstances, while still allowing the whole system to swap in emergency conditions.

sudo swapoff -a

# sysctl settings, to be added to /etc/sysctl.conf or equivalent

vm.swappiness = 1

vm.max_map_count = 262144

curl -u "test:test" "http://localhost:8080/snowowl/codesystems?pretty"

{

"items" : [ ],

"limit" : 0,

"total" : 0

}

curl -X POST \

-u "test:test" \

-H "Content-type: application/json" \

http://localhost:8080/snowowl/codesystems \

-d '{

"id": "SNOMEDCT",

"url": "http://snomed.info/sct/900000000000207008",

"title": "SNOMED CT International Edition",

"description": "SNOMED CT International Edition",

"status": "active",

"copyright": "(C) 2025 International Health Terminology Standards Development Organisation 2002-2023. All rights reserved.",

"contact": "https://snomed.org",

"oid": "2.16.840.1.113883.6.96",

"toolingId": "snomed",

"settings": {

"moduleIds": [

"900000000000207008",

"900000000000012004"

],

"locales": [

"en-x-900000000000508004",

"en-x-900000000000509007"

],

"languages": [

{

"languageTag": "en",

"languageRefSetIds": [

"900000000000509007",

"900000000000508004"

]

},

{

"languageTag": "en-us",

"languageRefSetIds": [

"900000000000509007"

]

},

{

"languageTag": "en-gb",

"languageRefSetIds": [

"900000000000508004"

]

}

],

"publisher": "SNOMED International",

"namespace": "INT",

"namespaceConceptId": "373872000",

"maintainerType": "SNOMED_INTERNATIONAL"

}

}'

curl -u "test:test" "http://localhost:8080/snowowl/codesystems/SNOMEDCT?pretty"

{

"id": "SNOMEDCT",

"url": "http://snomed.info/sct/900000000000207008",

"title": "SNOMED CT International Edition",

"language": "en",

...

"branchPath": "MAIN/SNOMEDCT",

...

}

Terminology Server releases are shared with customers through custom download URLs. The downloaded artifact is a Linux (tar.gz) archive that contains:

an initial folder structure

the configuration files for all services

a docker-compose.yml file that brings together the entire technology stack to run and manage the service

the credentials required to pull our proprietary docker images

As a best practice, it is advised to extract the content of the archive under /opt, so that the deployment folder is /opt/snow-owl. The docker-compose setup relies on this path. If required, it can be changed later by editing the ./snow-owl/docker/.env file (see the DEPLOYMENT_FOLDER environment variable).

When decompressing the archive it is important to use the --same-owner and --preserve-permissions options so the docker containers can access the files and folders appropriately.
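The extraction step could look like the following sketch. The helper name extract_release and the archive name in the usage comment are placeholders, and the long option names are those of GNU tar.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract a release archive into a target directory
# while keeping the ownership and permissions recorded in the archive.
extract_release() {
  local archive="$1" target="$2"
  tar --extract --gzip --file="$archive" --directory="$target" \
    --same-owner --preserve-permissions
}

# Typical use, run as root (the archive name is a placeholder):
#   extract_release snow-owl-release.tar.gz /opt
```

Ownership can only be restored in full when the command runs as root, which is why the usage example assumes a root shell.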

The next page will describe the content of the release package in more detail.

Some settings may require attention before moving to production. While the steps below may not necessitate any action, there are cases where the host running both Snow Owl and Elasticsearch will require fine-tuning.

Some system-level settings need to be checked before deploying your own Elasticsearch in production.

An extensive guide is available on what to verify, but it usually comes down to these items:

By default, Snow Owl runs inside the container as user snowowl using uid:gid 1000:1000.

If you are bind-mounting a local directory or file:

ensure it is readable by the user mentioned above

ensure that settings, data and log directories are writable as well

A good strategy is to grant group access to gid 1000 or 0 for the local directory.

In case the file system of the docker service on the host is different from what the Snow Owl deployment uses, it could be worthwhile to bind-mount Snow Owl's temporary working folder to a path that has excellent I/O performance. E.g.:

the root file system / is backed by a block storage that purposefully has lower I/O performance, this is the file system used by the docker service.

the deployment folder /opt/snow-owl is backed by a fast local SSD

The definition of the snowowl service in the docker compose file should be amended like this:

The Terminology Server is recommended to be installed on x86_64 / amd64 Linux operating systems where Docker Engine is available. See the list of supported architectures by Docker.

Here is the list of distributions that we suggest in the order of recommendation:

Ubuntu LTS releases

Debian LTS releases

CentOS 7 (deprecated)

It is possible to install the server release package on other distributions but bear in mind that there might be limitations.

Before starting the production deployment of the Terminology Server make sure that the following packages are installed and configured properly:

Docker Engine

ability to execute bash scripts

In case a reverse proxy is used, the Terminology Server requires two ports to be opened either towards the intranet or the internet (depending on usage):

http: port 80

https: port 443

In case there is no reverse proxy installed, the following port must be opened to be able to access the server's REST API:

http: port 8080

By default, Snow Owl includes the OSS version of Elasticsearch and runs it in embedded mode to store terminology data and make it available for search. This is convenient for single-node environments (e.g. for evaluation, testing and development), but it might not be sufficient when you go into production.

To configure Snow Owl to connect to an Elasticsearch cluster, change the clusterUrl property in the snowowl.yml configuration file:

The value for this setting should be a valid HTTP URL pointing to the HTTP API of your Elasticsearch cluster, which by default runs on port 9200.
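As an illustration, the snippet below prints a snowowl.yml fragment for the clusterUrl setting. The nested layout is inferred from the dotted parameter name repository.index.clusterUrl, the host name is a placeholder, and cluster_config is a hypothetical helper used only to make the fragment easy to generate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a snowowl.yml fragment that points Snow Owl
# at a remote Elasticsearch cluster. The nesting mirrors the dotted name
# repository.index.clusterUrl.
cluster_config() {
  local url="$1"
  cat <<EOF
repository:
  index:
    clusterUrl: $url
EOF
}

# The host name below is a placeholder, not a real endpoint.
cluster_config "http://your.es.cluster:9200"
```

The printed fragment is meant to be merged into the existing snowowl.yml by hand rather than appended blindly, since the file may already contain a repository section.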

If you are using the .zip or .tar.gz archives, the data and logs directories are sub-folders of $SO_HOME. If these important folders are left in their default locations, there is a high risk of them being deleted while upgrading Snow Owl to a new version.

In production use, you will almost certainly want to change the locations of the data and log folders.

The RPM and Debian distributions already use custom paths for data and logs.

To allow clients to connect to Snow Owl, make sure you open access to the following ports:

8080/TCP: Used by Snow Owl Server's REST API for HTTP access

8443/TCP: Used by Snow Owl Server's REST API for HTTPS access

2036/TCP: Used by the Net4J binary protocol connecting Snow Owl clients to the server

By default, Snow Owl tells the JVM to use a heap with a minimum and maximum size of 2 GB. When moving to production, it is important to configure heap size to ensure that Snow Owl has enough heap available.

To configure the heap size settings, change the -Xms and -Xmx settings in the SO_JAVA_OPTS environment variable.

The value for these settings depends on the amount of RAM available on your server and whether you are running Elasticsearch on the same node as Snow Owl (either embedded or as a service) or running it in its own cluster. Good rules of thumb are:

Set the minimum heap size (Xms) and maximum heap size (Xmx) to be equal to each other.

Too much heap can subject the JVM to long garbage collection pauses.

Set Xmx to no more than 50% of your physical RAM, to ensure that there is enough physical RAM left for kernel file system caches.
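The rules above can be turned into a small calculation. The sketch below is illustrative: heap_opts is a hypothetical helper that takes the total RAM in megabytes and uses exactly half of it, which is the upper bound rather than a recommended value. When Elasticsearch shares the host, a smaller heap may be more appropriate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive equal -Xms/-Xmx values from the total RAM
# (in megabytes), using half of the RAM as the upper bound.
heap_opts() {
  local total_mb="$1"
  local heap_mb=$(( total_mb / 2 ))
  printf '%s\n' "-Xms${heap_mb}m -Xmx${heap_mb}m"
}

# Example: a host with 16 GB (16384 MB) of RAM.
export SO_JAVA_OPTS="$(heap_opts 16384)"
echo "$SO_JAVA_OPTS"   # prints: -Xms8192m -Xmx8192m
```

Setting both flags to the same value follows the first rule of thumb and avoids heap resizing at runtime.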

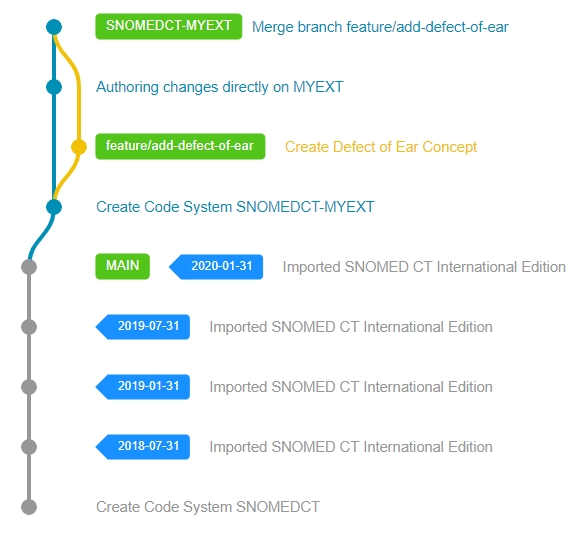

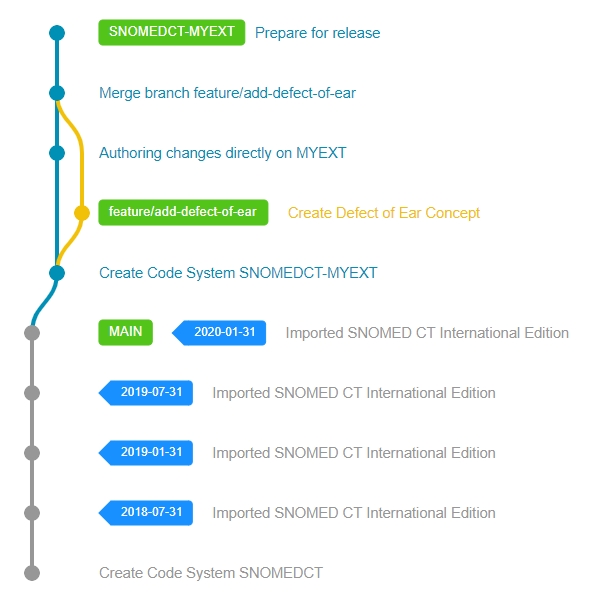

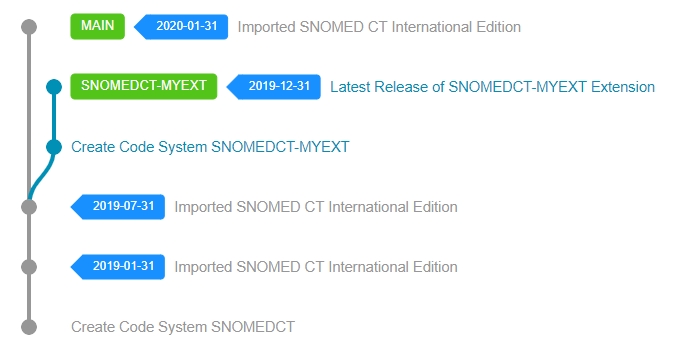

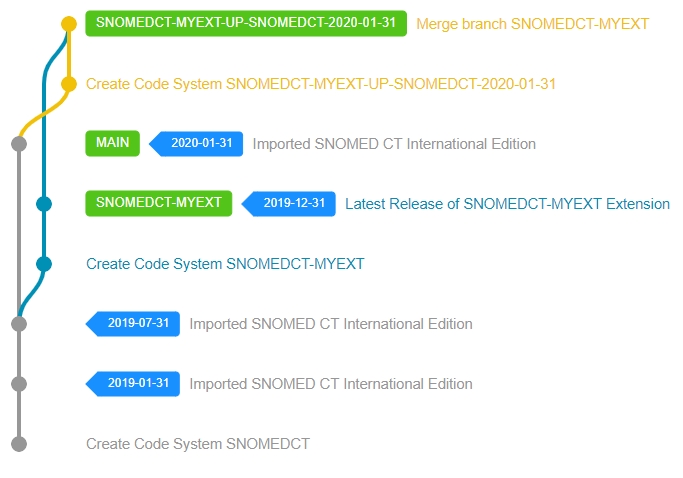

When an Extension reaches the end of its current development cycle, it needs to be prepared for release and distribution.

All planned content changes that are still on their dedicated branch either need to be integrated with the main development version or removed from the scope of the next release.

After all development branches have been merged and integrated with the main work-in-progress version, the Extension needs to be prepared for release. This usually involves last minute fixes, running quality checks and validation rules and generating the final necessary normal form of the Extension.

When all necessary steps have been performed successfully, a new Code System Version needs to be created in Snow Owl to represent the latest release. The versioning process will assign the requested effectiveTime to all unpublished components, update the necessary Metadata reference sets (like the Module Dependency Reference Set) and finally create a version branch to reference this release later.

After a successful release, an RF2 Release Package needs to be generated for downstream consumers of your Extension. Snow Owl can generate the final RF2 Release Package for the newly released version via the RF2 Export API.

Full list of steps to perform before spinning up the service:

Extract the Terminology Server release archive to a folder. E.g. /opt/snow-owl

(Optional) Obtain an SSL certificate

Make sure a DNS A record is routed to the host's public IP address

Go into the folder ./snow-owl/docker/cert

Execute the ./init-certificate.sh script:

(Optional) Configure access for managed Elasticsearch Cluster (elastic.co)

(Optional) Extract dataset to ./snow-owl/resources where folder structure should look like ./snow-owl/resources/indexes/nodes/0 at the end.

Verify file ownership to be UID=1000 and GID=0:

Check any credentials or settings that need to be changed in ./snow-owl/docker/.env

Authenticate with our private docker registry while in the folder ./snow-owl/docker:

Issue a pull (in folder ./snow-owl/docker)

Spin up the service (in the folder ./snow-owl/docker)

Verify that the REST API of the Terminology Server is available at:

With SSL: https://snow-owl.example.com/snowowl

Without SSL: http://hostname:8080/snowowl

Verify that the server and cluster status is GREEN by querying the following REST API endpoint:

With SSL:

Without SSL:

Enjoy using the Snow Owl Terminology Server 🎉

Authoring is the process by which content is created in an extension in accordance with a set of authoring principles. These principles ensure the quality of content and referential integrity between content in the extension and content in the International Edition (the principles are set by SNOMED International).

During the extension development process authors are:

creating, modifying or inactivating content according to editorial principles and policies

running validation processes to verify the quality and integrity of their Extension

classifying their authored content with an OWL Reasoner to produce its distribution normal form

The authors directly (via the available REST and FHIR APIs) or indirectly (via user interfaces, scripts, etc.) work with the Snow Owl Terminology Server to make the necessary changes for the next planned Extension release.

Authors often require a dedicated editing environment where they can make the necessary changes and let others review the changes they have made, so errors and issues can be corrected before integrating the change with the rest of the Extension. Similarly to how SNOMED CT Extensions are separated from the SNOMED CT International Edition and other dependencies, this can be achieved by using branches.

- to create and merge branches

- to compare branches

To let authors make the necessary changes they need, Snow Owl offers the following SNOMED CT component endpoints to work with:

Concept API - to create and edit SNOMED CT Concepts

Description API - to create and edit SNOMED CT Descriptions

Relationship API - to create and edit SNOMED CT Relationships

Reference Set API - to create and edit SNOMED CT Reference Sets

To verify quality and integrity of the changes they have made, authors often generate reports and make further fixes according to the received responses. In Snow Owl, reports and rules can be represented with validation queries and scripts.

Validation API - to run validation rules and fetch their reported issues on a per branch basis

Last but not least, authors run an OWL Reasoner to classify their changes and generate the necessary normal form of their Extension. The Classification API provides support for running these reasoner instances and generating the necessary normal form.

Snow Owl uses SLF4J and Logback for logging.

The logging configuration file (serviceability.xml) can be used to configure Snow Owl logging. Its location depends on your installation method; by default it is located in the ${SO_HOME}/configuration folder.

Extensive information on how to customize logging and all the supported appenders can be found in the Logback documentation.

Snow Owl supports the generation of structured application logs via the Logstash encoder.

To enable it, uncomment the example snippet provided in the console appender section in the configuration/serviceability.xml file or copy and paste it from here:

For additional config options visit the Logstash encoder GitHub page.

On top of single Edition/Extension distribution and authoring, Snow Owl provides full support for multi-SNOMED CT distribution and authoring even if the Extensions depend on different versions of the SNOMED CT International Edition.

To achieve a deployment like this you need to perform the same initialization steps for each desired SNOMED CT Extension as if it were a single extension scenario (see single extension). Development and maintenance of each managed extension can happen in parallel without affecting one or the other. Each of them can have their own release cycles, maintenance and upgrade schedules, and so on.

After you have initialized your Snow Owl instance with the Extensions you'd like to maintain the next steps are:

Before importing an RF2 file, you can check existing content, for example by looking up the available SNOMED CT Concepts in the created SNOMED CT resource:

curl -u "test:test" "http://localhost:8080/snowowl/snomedct/SNOMEDCT/concepts?pretty"

As the response also indicates, the terminology is empty:

{

"items": [ ],

"limit": 50,

"total": 0

}

Let's import an RF2 archive using its SNAPSHOT content (see release types here) so that we can further explore the available SNOMED CT APIs. To start the import process, send the following request:

Curl will display the entire interaction between it and the server, including many request and response headers. We are interested in these two (response) rows in particular:

The first one indicates that the file was uploaded successfully and a job has been created to track the progress of the import, while the second row indicates the location of this import job.

Depending on the size and type of the RF2 package, hardware, and Snow Owl configuration, RF2 imports might take a few hours to complete (but usually less). Official SNAPSHOT distributions can be imported in less than 30 minutes by allocating at least 6 GB of heap size to Snow Owl and configuring it to use a solid-state disk for the data directory.

The process itself is asynchronous and its status can be checked by periodically sending a GET request to the location returned in the response header:

The expected response while the import is running:

Upon completion, you should receive a different response that lists component identifiers visited during the import as well as any defects encountered in uploaded release files:
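The polling described above can be sketched in Python. This is a minimal sketch, not part of Snow Owl: `fetch_status` is a hypothetical caller-supplied function that performs the GET request against the job location and returns the parsed JSON body, and treating every status other than RUNNING as final is an assumption based on the two statuses shown in this guide.

```python
import time
from typing import Callable, Dict


def wait_for_import(
    fetch_status: Callable[[], Dict],
    interval_seconds: float = 5.0,
    max_attempts: int = 720,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict:
    """Poll the import job until it is no longer RUNNING and return its last body.

    fetch_status is hypothetical: it should GET the job location and return
    the decoded JSON document (for example {"id": "...", "status": "RUNNING"}).
    """
    for _ in range(max_attempts):
        job = fetch_status()
        # Assumption: anything other than RUNNING is a final state.
        if job.get("status") != "RUNNING":
            return job
        sleep(interval_seconds)
    raise TimeoutError(f"import job still RUNNING after {max_attempts} attempts")
```

Passing the fetch and sleep functions as arguments keeps the loop independent of any particular HTTP client and makes it easy to try out without a running server.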

The Terminology Server release package contains a built-in solution to perform rolling and permanent data backups. The docker stack has a specialized container (called snow-owl-backup) that is responsible for creating scheduled backups of:

the Elasticsearch indices

the OpenLDAP database (if present)

For the Elasticsearch indices, the backup container uses Elasticsearch snapshots. Snapshots are labeled in a predefined format with timestamps, e.g. snowowl-daily-20220324030001.

The OpenLDAP database is backed up by compressing the contents of the folder under ./snow-owl/ldap. Filenames are generated using the name of the corresponding Elasticsearch snapshot. E.g. snowowl-daily-20220324030001.tar.gz.
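The naming scheme can be illustrated with a short Python sketch. The timestamp layout (year, month, day, hour, minute, second) is inferred from the example labels in this guide and is an assumption; the helper names are hypothetical and are not part of the backup container.

```python
from datetime import datetime


def snapshot_label(kind: str, taken_at: datetime) -> str:
    """Build a label such as snowowl-daily-20220324030001.

    Assumption: the numeric suffix is YYYYMMDDhhmmss, as the examples suggest.
    """
    return f"snowowl-{kind}-{taken_at:%Y%m%d%H%M%S}"


def ldap_archive_name(label: str) -> str:
    """The OpenLDAP archive reuses the snapshot label as its file name."""
    return f"{label}.tar.gz"
```

For instance, `snapshot_label("daily", datetime(2022, 3, 24, 3, 0, 1))` yields the label used in the example above.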

Backup Window: when a backup operation is running the Terminology Server blocks all write operations on the Elasticsearch indices. This is to prevent data loss and have consistent backups.

Backup Duration: the very first backup of an Elasticsearch cluster takes longer (typically 20 to 40 minutes, depending on data size and I/O performance); subsequent backups should take significantly less, around 1 to 5 minutes.

Daily backups are rolling backups, scheduled, and cleaned up based on the settings specified in the ./snow-owl/docker/.env file. Here is a summary of the important settings that could be changed.

To store backups redundantly it is advised to mount a remote file share to a local path on the host. By default, this folder is configured to be at ./snow-owl/backup. It contains:

the snapshot files of the Elasticsearch cluster

the backup files of the OpenLDAP database

extra configuration files

Backup jobs are scheduled by cron, so cron expressions can be defined here to specify the time a daily backup should happen.

This is used to tell the backup container how many daily backups must be kept.

Let's say we have an external file share mounted to /mnt/external_folder. There is a need to create daily backups after each working day, during the night at 2:00 am. Only the last two-weeks-worth of data should be kept (assuming 5 working days each week).
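The arithmetic behind this scenario can be sketched in Python. The setting names are the ones used in the .env file of this guide; the helper function itself is hypothetical, and the Tue-Sat day range assumes that a 2:00 am run after a Monday to Friday working day falls on the following calendar day.

```python
def daily_backup_settings(backup_folder: str, weeks_to_keep: int,
                          working_days_per_week: int, hour: int, minute: int) -> dict:
    """Derive the .env values for a nightly backup after each working day."""
    return {
        "BACKUP_FOLDER": backup_folder,
        # Two weeks of five working days means ten daily backups are retained.
        "NUMBER_OF_DAILY_BACKUPS_TO_KEEP": str(weeks_to_keep * working_days_per_week),
        # Assumption: nights following Mon-Fri are the early hours of Tue-Sat.
        "CRON_DAYS": "Tue-Sat",
        "CRON_HOURS": str(hour),
        "CRON_MINUTES": str(minute),
    }


if __name__ == "__main__":
    settings = daily_backup_settings("/mnt/external_folder", 2, 5, 2, 0)
    for name, value in settings.items():
        print(f"{name}={value}")
```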

It is also possible to perform backups occasionally, e.g. before versioning an important SNOMED CT release or before a Terminology Server version upgrade. These backups are kept until manually removed.

To create such backups the following command needs to be executed using the backup container's terminal:

The script will create a snapshot backup of the Elasticsearch data with a label snowowl-my-backup-label-20220405030002 and an archive that contains the database of the OpenLDAP server with the name snowowl-my-backup-label-20220405030002.tar.gz.

Snow Owl® is a highly scalable terminology server with revision-control capabilities and collaborative authoring platform features. It allows you to store, search, and author high volumes of terminology artifacts quickly and efficiently.

If you’d like to see Snow Owl in action, the 🌎 provides a managed terminology server and high-quality terminology content management from your web browser.

The most common use case to consume a SNOMED CT Release Package is to import it directly into a Terminology Server (like Snow Owl) and make it available as read-only content for both human and machine access (via REST and FHIR APIs).

Since Snow Owl by default comes with a pre-initialized SNOMED CT Code System called SNOMEDCT, it takes just a single call to import the official RF2 package using the SNOMED CT RF2 Import API. The import by default creates a Code System Version for each SNOMED CT Effective Date available in the supplied RF2 package. After a successful import, the content is immediately available via REST and FHIR APIs.

You can manage and authenticate users with the built-in file realm. All the data about the users for the file realm is stored in the users file. The file is located in SO_PATH_CONF and is read on startup.

You need to explicitly select the file realm in the snowowl.yml configuration file in order to use it for authentication.

In the above configuration, the file realm reads your users from the users file. Each row in the file represents a username and password delimited by the : character. The passwords are stored as BCrypt hashes. The default users file comes with a default snowowl user with the default snowowl password.
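The row format of the users file can be illustrated with a small Python sketch. It only splits each row into a username and a hash; it does not verify passwords, and the way blank lines are handled is an assumption rather than documented Snow Owl behaviour.

```python
def parse_users(text: str) -> dict:
    """Map each username to its stored BCrypt hash.

    Each non-empty row is expected to look like username:hash. The row is
    split on the first colon only; BCrypt hashes use '$' as their separator.
    """
    users = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # assumption: blank lines are ignored
        username, _, password_hash = line.partition(":")
        users[username] = password_hash
    return users
```

The hash value passed in when trying this out can be any placeholder string; producing real BCrypt hashes requires a dedicated library or the CLI command mentioned in this guide.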

In certain cases, a pre-built dataset is also shipped together with the Terminology Server. This is to ease the initial setup procedure and get going fast.

Two categories make up Snow Owl's Reference Set API:

Reference Sets category to get, search, create and modify reference sets

Reference Set Members category to get, search, create and modify reference set members

Basic operations like create, update, and delete are supported for both categories.

Terminology changes committed to a source or target branch can be compared by creating a compare resource.

A review identifier can be added to merge requests as an optional property. If the source or target branch state is different from the values captured when creating the review, the merge/rebase attempt will be rejected. This can happen, for example, when additional commits are added to the source or the target branch while a review is in progress; the review resource state becomes STALE in such cases.

Reviews and concept change sets have a limited lifetime. CURRENT reviews are kept for 15 minutes, while review objects in any other states are valid for 5 minutes by default. The values can be changed in the server's configuration file.
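The default lifetimes can be expressed as a small Python sketch. Only the 15 and 5 minute values and the CURRENT and STALE state names come from this guide; which timestamp the server measures the lifetime from is not stated here, so the `last_updated` parameter is an assumption, and the function is illustrative rather than Snow Owl's implementation.

```python
from datetime import datetime, timedelta

KEEP_CURRENT = timedelta(minutes=15)  # default lifetime of CURRENT reviews
KEEP_OTHERS = timedelta(minutes=5)    # default lifetime of reviews in any other state


def is_expired(status: str, last_updated: datetime, now: datetime) -> bool:
    """Return True when a review has outlived its default lifetime.

    Assumption: the lifetime is counted from the review's last update.
    """
    keep = KEEP_CURRENT if status == "CURRENT" else KEEP_OTHERS
    return now - last_updated > keep
```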

repository:
  index:
    clusterUrl: http://your.es.cluster:9200 # the ES cluster URL
    clusterUsername: snowowl # Optional username to connect to a protected ES cluster
    clusterPassword: snowowl_password # Optional password to connect to a protected ES cluster

curl -v -u "test:test" http://localhost:8080/snowowl/snomedct/SNOMEDCT/import?type=snapshot\&createVersions=false \
  -F file=@SnomedCT_InternationalRF2_PRODUCTION.zip

< HTTP/1.1 201 Created
< Location: http://localhost:8080/snowowl/snomedct/SNOMEDCT/import/107f6efa69886bfdd73db5586dcf0e15f738efed

tar --extract \
  --gzip \
  --verbose \
  --same-owner \
  --preserve-permissions \
  --file=/path/to/snow-owl-linux-x86_64.tar.gz \
  --directory=/opt/

Snow Owl connecting to a remote Elasticsearch cluster requires less memory, but make sure you still allocate enough for your use cases (classification, batch processing, etc.).

curl -u "test:test" http://localhost:8080/snowowl/snomedct/SNOMEDCT/import/107f6efa69886bfdd73db5586dcf0e15f738efed?pretty

{
  "id": "107f6efa69886bfdd73db5586dcf0e15f738efed",
  "status": "RUNNING"
}

To simplify file realm configuration, the Snow Owl CLI comes with a command to add a user to the file realm (snowowl users add). See the command help manual (-h option) for further details.

The file security realm does NOT support the Authorization formats at the moment. If you are interested in configuring role-based access control for your users, it is recommended to switch to the LDAP security realm.

identity:
  providers:
    - file:
        name: users

The open-source version of Snow Owl can be downloaded as a Docker image. The list of all published tags and additional details about the image can be found in Snow Owl's public Docker Hub repository.

To initiate a Snow Owl deployment, the only requirements are:

the Docker Engine (see install guide)

a terminal

git (optional, see install guide)

Setting up a fully operational Snow Owl server depends on which architecture is supported by the Docker Engine. Thankfully, Docker Engine supports a wide variety of platforms, such as Linux, Windows, and macOS.

There is a preassembled docker compose configuration in Snow Owl's GitHub repository. This set of files can be used to start up a Snow Owl terminology server and its corresponding data layer, an Elasticsearch instance.

To get ahold of the necessary files it is required to either download the repository content (see instructions here) or clone the git repository using the git command line tool:

Once the clone is finished find the directory containing the compose example:

While in this directory start the services using docker compose:

The service snowowl listens on localhost:8080 while it talks to the elasticsearch service over an isolated Docker network.

To stop the application, type docker compose down. Data volumes/mounts will remain on disk, so it's possible to start the application again with the same data using docker compose up.

A default user is configured to experiment with features that would require authentication and authorization. The username is test and its password is the same.

Here is the full content of the compose file:

Reducing the memory settings of the docker stack is feasible when Snow Owl is assessed with limited terminologies and basic operations such as term searches. An applicable minimum value should be no less than 2 GB for each service.

The memory settings of Elasticsearch can be changed by adapting the following line in the docker-compose.yml file to e.g.:

The memory settings of Snow Owl can be changed by adapting the following line in the docker-compose.yml file to e.g.:

Snow Owl's status is exposed via a health REST API endpoint. To see if everything went well, run the following command:

The expected response is

The response contains the installed version along with a list of repositories, their overall health (e.g. "snomed" with health "GREEN"), and their associated indices and status (e.g. "snomed-relationship" with status "GREEN").
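A script that consumes this response could check it along the following lines. This is a minimal Python sketch: the field names (repositories, items, health, indices, status) are taken from the example response in this guide, while the function name and the rule that anything other than GREEN counts as a problem are assumptions.

```python
def unhealthy_parts(info: dict) -> list:
    """List repository ids and index names that are not reported as GREEN."""
    problems = []
    for repo in info.get("repositories", {}).get("items", []):
        if repo.get("health") != "GREEN":
            problems.append(repo.get("id"))
        for index in repo.get("indices", []):
            if index.get("status") != "GREEN":
                problems.append(index.get("index"))
    return problems
```

An empty list means every repository and index in the response reported GREEN.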

Snow Owl can be started from the command line as follows:

By default, Snow Owl runs in the foreground, prints some of its logs to the standard output (stdout), and can be stopped by pressing Ctrl-C.

To run Snow Owl as a daemon, use the following command:

Log messages can be found in the $SO_HOME/serviceability/logs/ directory.

Snow Owl is not started automatically after installation. How to start and stop Snow Owl depends on whether your system uses SysV init or systemd (used by newer distributions). You can tell which is being used by running this command:

Use the chkconfig command to configure Snow Owl to start automatically when the system boots up:

Snow Owl can be started and stopped using the service command:

If Snow Owl fails to start for any reason, it will print the reason for failure to STDOUT. Log files can be found in /var/log/snowowl/.

To configure Snow Owl to start automatically when the system boots up, run the following commands:

Snow Owl can be started and stopped as follows:

These commands provide no feedback as to whether Snow Owl was started successfully or not. Instead, this information will be written in the log files located in /var/log/snowowl/.

use snapshot-restore

use Snow Owl to rebuild the data to the remote cluster

These datasets are compressed copies of the Elasticsearch data folder and follow the same structure, except that they have a top-level folder called indexes. This is the same folder as ./snow-owl/resources/indexes, so to load the dataset, extract the contents of the dataset archive to this path.

Make sure to validate the file ownership of the indexes folder after decompression. Elasticsearch requires UID=1000 and GID=0 to be set for its data folder.

tar --extract \
  --gzip \
  --verbose \
  --same-owner \
  --preserve-permissions \
  --file=snow-owl-resources.tar.gz \
  --directory=/opt/snow-owl/resources/

chown -R 1000:0 /opt/snow-owl/resources

serviceability.xml for configuring Snow Owl logging

elasticsearch.yml for configuring the underlying Elasticsearch instance in case of embedded deployments

These files are located in the config directory, whose default location depends on whether or not the installation is from an archive distribution (tar.gz or zip) or a package distribution (Debian or RPM packages).

For the archive distributions, the config directory location defaults to $SO_PATH_HOME/configuration. The location of the config directory can be changed via the SO_PATH_CONF environment variable as follows:

Alternatively, you can export the SO_PATH_CONF environment variable via the command line or via your shell profile.

For the package distributions, the config directory location defaults to /etc/snowowl. The location of the config directory can also be changed via the SO_PATH_CONF environment variable, but note that setting this in your shell is not sufficient. Instead, this variable is sourced from /etc/default/snowowl (for the Debian package) and /etc/sysconfig/snowowl (for the RPM package). You will need to edit the SO_PATH_CONF=/etc/snowowl entry in one of these files accordingly to change the config directory location.

The configuration format is YAML. Here is an example of changing the path of the data directory:

Settings can also be flattened as follows:
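The relationship between the nested and the flattened notation can be illustrated in Python. This sketch is not how Snow Owl reads its configuration; it only shows that each dotted key is the path through the nested mapping.

```python
def flatten(settings: dict, prefix: str = "") -> dict:
    """Turn a nested mapping into dotted keys, e.g. path.data."""
    flat = {}
    for key, value in settings.items():
        dotted = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            # Descend into nested sections and extend the dotted prefix.
            flat.update(flatten(value, dotted))
        else:
            flat[dotted] = value
    return flat
```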

Environment variables referenced with the ${...} notation within the configuration file will be replaced with the value of the environment variable, for instance:
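The substitution rule can be mimicked with a few lines of Python. This is an illustrative sketch only: how Snow Owl itself treats undefined variables is not covered in this guide, so raising a KeyError for them is an assumption of the example.

```python
import re

# Matches ${NAME} and captures NAME.
_PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def substitute(text: str, env: dict) -> str:
    """Replace every ${NAME} placeholder with env["NAME"].

    Assumption: an undefined variable is an error (KeyError) in this sketch.
    """
    return _PLACEHOLDER.sub(lambda match: env[match.group(1)], text)
```

Calling `substitute(text, dict(os.environ))` would apply the values of the current process environment.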

./snow-owl/docker/configs/snowowl/snowowl.yml:

The Snow Owl Terminology Server leverages Elasticsearch's synonym filters. For this feature to work properly with a managed Elasticsearch cluster, our custom dictionary has to be uploaded and configured. The synonym file can be found in the release package under ./snow-owl/docker/configs/elasticsearch/synonym.txt. This file needs to be compressed as a zip archive with the following structure:

For the managed Elasticsearch instance this zip file needs to be configured as a bundle extension. The steps required are covered in this guide in great detail.

Once the bundle is configured and the cluster is up we can (re)start the docker stack. In case there are any troubles the Terminology Server will refuse to initialize and let you know what the problem is in its log files.

repository:
  index:
    socketTimeout: 60000
    clusterUrl: https://my-es-cluster.elastic-cloud.com:9243
    clusterUsername: my-es-cluster-user
    clusterPassword: my-es-cluster-pwd

On top of the basic operations, reference sets and members support actions. Actions have an action property to specify which action to execute; the rest of the JSON properties will be used as the body of the action.

Supported reference set actions are:

sync - synchronize all members of a query type reference set by executing their query and comparing the results with the current members of their referenced target reference set

Supported reference set member actions are:

create - create a reference set member (uses the same body as POST /members)

update - update a reference set member (uses the same body as PUT /members)

delete - delete a reference set member

sync - synchronize a single member by executing the query and comparing the results with the current members of the referenced target reference set

For example the following will sync a query type reference set member's referenced component with the result of the reevaluated member's ESCG query

Members list of a single reference set can be modified by using the following bulk-like update endpoint:

Input

The request body should contain the commitComment property and a requests array. The requests array must contain actions (see Actions API) that are enabled for the given set of reference set members. Member create actions can omit the referenceSetId parameter; those will use the one defined as the path parameter in the URL. For example, by using this endpoint you can create, update, and delete members of a reference set in one single commit.
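A request body of this shape can be assembled programmatically. The following Python sketch uses the commitComment, requests, and action property names from this guide; the memberId property in the usage example is hypothetical and only stands in for whatever action-specific properties the server expects.

```python
import json


def bulk_member_update(commit_comment: str, actions: list) -> str:
    """Serialize a bulk update body holding a commit comment and a list of actions."""
    body = {
        "commitComment": commit_comment,
        "requests": list(actions),
    }
    return json.dumps(body, indent=2)


if __name__ == "__main__":
    # "memberId" is a hypothetical action-specific property used for illustration.
    print(bulk_member_update(
        "Updating members of my simple type reference set",
        [{"action": "delete", "memberId": "<member id>"}],
    ))
```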

PUT /:path/refsets/:id/members

First, create the KMÅ Code System:

Then, import the concepts from the official TSV file:

And last, create a new version to mark the content:

POST /codesystems

{

"id": "kva-kirurgiska",

"url": "http://klassifikationer.socialstyrelsen.se/kva-kirurgiska",

"title": "KVÅ – kirurgiska åtgärder (KKÅ)",

"language": "se",

"description": "# Klassifikation av kirurgiska åtgärder",

"status": "active",

"owner": "ownerUserId",

"copyright": "",

"contact": "[email protected] - Avdelningen för register och statistik, Enheten för klassifikationer och terminologi",

"oid": "1.2.752.116.1.3.2.3.6",

"toolingId": "lcs",

"settings": {

"publisher": "Socialstyrelsen",

"isPublic": true

}

}

POST /lcs/kva-kirurgiska/import?idColumn=Kod&ptColumn=Titel&synonymColumns=Förkortning(ar)&synonymColumns=Beskrivning&parentColumn=Överordnad%20kod&locale=se -F "[email protected]"

Response

Terminology components (and in fact any content) can be read from any point in time by using the special path expression: {branch}@{timestamp}. To get the state of a SNOMED CT Concept from the previous comparison on the compareBranch at the returned compareHeadTimestamp, you can use the following request:

Request

Response

To get the state of the same SNOMED CT Concept but on the base branch, you can use the following request:

Request

Response

Additionally, if required to compute what's changed on the component since the creation of the task, it is possible to get back the base version of the changed component by using another special path expression: {branch}^.

Request

Response
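The two special path expressions can be composed with small helpers, sketched here in Python. The expression syntax and the /snomedct/.../concepts/... path come from the requests in this guide; the helper names are hypothetical.

```python
def at_timestamp(branch: str, timestamp: int) -> str:
    """Path expression for the state of a branch at a point in time: {branch}@{timestamp}."""
    return f"{branch}@{timestamp}"


def base_of(branch: str) -> str:
    """Path expression for the base version of a branch: {branch}^."""
    return f"{branch}^"


def concept_path(path: str, concept_id: str) -> str:
    """Relative request path for reading a SNOMED CT Concept on the given path."""
    return f"/snomedct/{path}/concepts/{concept_id}"
```

For example, `concept_path(base_of("MAIN/a"), "138875005")` builds the path used to read the base version of the changed concept.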

POST /compare
{
  "baseBranch": "MAIN",
  "compareBranch": "MAIN/a",
  "limit": 100
}

snowowl:
  image: b2ihealthcare/snow-owl-<variant>:<version>
  ...
  volumes:
    - ./config/snowowl/snowowl.yml:/etc/snowowl/snowowl.yml
    - ./config/snowowl/users:/etc/snowowl/users
    - ${SNOWOWL_DATA_FOLDER}:/var/lib/snowowl
    - ${SNOWOWL_LOG_FOLDER}:/var/log/snowowl
+   - /path/to/folder/with/fast/performance:/usr/share/snowowl/work
  ports:
  ...

path:
  data: /var/data/snowowl

# Set the minimum and maximum heap size to 12 GB.
SO_JAVA_OPTS="-Xms12g -Xmx12g" ./bin/startup

./init-certificate.sh -d snow-owl.example.com

chown -R 1000:0 ./snow-owl/docker ./snow-owl/logs ./snow-owl/resources

cat docker_login.txt | docker login -u <username> --password-stdin https://docker.b2ihealthcare.com

docker compose pull

docker compose up -d

curl https://snow-owl.example.com/snowowl/info

curl http://hostname:8080/snowowl/info

...

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">

<providers>

<timestamp>

<fieldName>timestamp</fieldName>

<timeZone>UTC</timeZone>

</timestamp>

<loggerName>

<fieldName>logger</fieldName>

</loggerName>

<logLevel>

<fieldName>level</fieldName>

</logLevel>

<threadName>

<fieldName>thread</fieldName>

</threadName>

<nestedField>

<fieldName>mdc</fieldName>

<providers>

<mdc />

</providers>

</nestedField>

<stackTrace>

<fieldName>stackTrace</fieldName>

<throwableConverter class="net.logstash.logback.stacktrace.ShortenedThrowableConverter">

<maxDepthPerThrowable>200</maxDepthPerThrowable>

<maxLength>14000</maxLength>

<rootCauseFirst>true</rootCauseFirst>

</throwableConverter>

</stackTrace>

<message />

<throwableClassName>

<fieldName>exceptionClass</fieldName>

</throwableClassName>

</providers>

</encoder>

</appender>

...

{

"id": "107f6efa69886bfdd73db5586dcf0e15f738efed",

"status": "FINISHED",

"response": {

"visitedComponents": [ ... ],

"defects": [ ],

"success": true

}

}

BACKUP_FOLDER=/mnt/external_folder
NUMBER_OF_DAILY_BACKUPS_TO_KEEP=10
CRON_DAYS=Tue-Sat
CRON_HOURS=2
CRON_MINUTES=0

root@host:/# docker exec -it backup bash
root@ad36cfb0448c:/# /backup/backup.sh -l my-backup-label

git clone https://github.com/b2ihealthcare/snow-owl.git

cd ./snow-owl/docker/compose

docker compose up -d

services:

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.11.1
    container_name: elasticsearch
    environment:
      - "ES_JAVA_OPTS=-Xms6g -Xmx6g"
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    volumes:
      - es-data:/usr/share/elasticsearch/data
      - ./config/elasticsearch/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./config/elasticsearch/synonym.txt:/usr/share/elasticsearch/config/analysis/synonym.txt
    healthcheck:
      test: curl --fail http://localhost:9200/_cluster/health?wait_for_status=green || exit 1
      interval: 1s
      timeout: 1s
      retries: 60
    ports:
      - "127.0.0.1:9200:9200"
    restart: unless-stopped
  snowowl:
    image: b2ihealthcare/snow-owl-oss:latest
    container_name: snowowl
    environment:
      - "SO_JAVA_OPTS=-Xms6g -Xmx6g"
      - "ELASTICSEARCH_URL=http://elasticsearch:9200"
    depends_on:
      elasticsearch:
        condition: service_healthy
    volumes:
      - ./config/snowowl/snowowl.yml:/etc/snowowl/snowowl.yml
      - ./config/snowowl/users:/etc/snowowl/users # default username and password: test - test
      - es-data:/var/lib/snowowl/resources/indexes
    ports:
      - "8080:8080"
    restart: unless-stopped

volumes:
  es-data:
    driver: local

- "ES_JAVA_OPTS=-Xms2g -Xmx2g"

- "SO_JAVA_OPTS=-Xms2g -Xmx2g"

curl http://localhost:8080/snowowl/info?pretty

{

"version": "<version number>",

"description": "You Know, for Terminologies",

"repositories": {

"items": [ {

"id": "snomed",

"health": "GREEN",

"diagnosis": "",

"indices" : [ {

"index": "snomed-relationship",

"status": "GREEN"

}, {

"index": "snomed-commit",

"status": "GREEN"

}, ...

} ]

}

}

./bin/startup

nohup ./bin/startup > /dev/null &

ps -p 1

sudo chkconfig --add snowowl

sudo -i service snowowl start
sudo -i service snowowl stop

sudo /bin/systemctl daemon-reload
sudo /bin/systemctl enable snowowl.service

sudo systemctl start snowowl.service
sudo systemctl stop snowowl.service

SO_PATH_CONF=/path/to/my/config ./bin/startup

path:
  data: /var/lib/snowowl

path.data: /var/lib/snowowl

repository.host: ${HOSTNAME}
repository.port: ${SO_REPOSITORY_PORT}

.
└── analysis
    └── synonym.txt

POST /members/:id/actions

{

"commitComment": "Sync member's target reference set",

"action": "sync"

}

{

"commitComment": "Updating members of my simple type reference set",

"requests": [

{

"action": "create|update|delete|sync",

"action-specific-props": ...

}

]

}

POST /versions

{

"resource": "codesystems/kva-kirurgiska",

"version": "2024-01-01",

"description": "2024-01-01 release",

"effectiveTime": "2024-01-01"

}

POST /codesystems

{

"id": "kva-medicinska",

"url": "http://klassifikationer.socialstyrelsen.se/kva-medicinska",

"title": "KVÅ – medicinska åtgärder (KMÅ)",

"language": "se",

"description": "# Klassifikation av medicinska åtgärder",

"status": "active",

"owner": "ownerUserId",

"copyright": "",

"contact": "[email protected] - Avdelningen för register och statistik, Enheten för klassifikationer och terminologi",

"oid": "1.2.752.116.1.3.2.3.5",

"toolingId": "lcs",

"settings": {

"publisher": "Socialstyrelsen",

"isPublic": true

}

}

POST /lcs/kva-medicinska/import?idColumn=Kod&ptColumn=Titel&synonymColumns=Beskrivning&parentColumn=Överordnad%20kod&locale=se -F "[email protected]"

POST /versions

{

"resource": "codesystems/kva-medicinska",

"version": "2024-01-01",

"description": "2024-01-01 release",

"effectiveTime": "2024-01-01"

}

Status: 200 OK

{

"baseBranch": "MAIN",

"compareBranch": "MAIN/a",

"compareHeadTimestamp": 1567282434400,

"newComponents": [],

"changedComponents": ["138875005"],

"deletedComponents": [],

"totalNew": 0,

"totalChanged": 1,

"totalDeleted": 0

}

GET /snomedct/MAIN@1567282434400/concepts/138875005

Status: 200 OK

{

"id": "138875005",

...

}

GET /snomedct/MAIN/concepts/138875005

Status: 200 OK

{

"id": "138875005",

...

}

GET /snomedct/MAIN/a^/concepts/138875005

Status: 200 OK

{

"id": "138875005",

...

}

Reference Set Member API - to create and edit SNOMED CT Reference Set Members

You work in the healthcare industry and are interested in using a terminology server for browsing, accessing, and distributing components of various terminologies and classifications to third-party consumers. In this case, you can use Snow Owl to load the necessary terminologies and access them via FHIR and proprietary APIs.

You are responsible for maintaining and publishing new versions of a particular terminology. In this case, you can use Snow Owl to collaboratively access and author the terminology content and, at the end of your release schedule, publish it with confidence and zero errors.

You have an Electronic Health Record system and would like to capture, maintain, and query clinical information in a structured and standardized manner. Your Snow Owl terminology server can integrate with your EHR server via standard APIs to provide the necessary access for both terminology binding and data processing and analytics.

Maintains multiple versions (including unpublished and published) for each terminology artifact and provides APIs to access them all

Independent work branches offer work-in-progress isolation, external business workflow integration, and team collaboration

SNOMED CT terminology support

RF2 Release File Specification as of 2025-05-01

Support for Relationships with concrete values

Official and Custom Reference Sets

Expression Constraint Language v2.2.0

Compositional Grammar 2.3.1

Expression Template Language 1.0.0

LOINC, ICD-10 (+ modifications) and generic CodeSystem support is available when licensed

With its modular design, the server can maintain multiple terminologies (including local codes, mapping sets, and value sets)

FHIR Terminology Service API supporting R4/R4B/R5 in a single deployment 🌎 specification

FHIR CodeSystem, ValueSet and ConceptMap resource type support + operations using a HAPI FHIR wrapper

Dedicated SNOMED CT, ATC, ICD-10, LOINC, Local Code System, Value Set, and Concept Map APIs

CIS API 1.0 🌎

Simple to use plug-in system makes it easy to develop and add new terminology tooling/API or any other functionality

Built on top of 🌎 Elasticsearch (highly scalable, distributed, open source search engine)

All the power of Elasticsearch is available (monitoring, analytics, and many more)

Connect to your existing cluster or use the embedded instance

Elasticsearch version 7 and version 8 clusters are supported

⚠️ Elasticsearch 9 is not supported yet

In March 2015, 🌎 SNOMED International generously licensed the Snow Owl Terminology Server components supporting SNOMED CT. They subsequently made the licensed code available to their members and the global community under an open-source license.

In March 2017, 🌎 NHS Digital licensed the Snow Owl Terminology Server to support the mandatory adoption of SNOMED CT throughout all care settings in the United Kingdom by April 2020. In addition to driving the UK’s clinical terminology efforts by providing a platform to author national clinical codes, Snow Owl will support the maintenance and improvement of the dm+d drug extension which alone is used in over 156 million electronic prescriptions per month. Improvements to the terminology server under this agreement were made available to the global community.

Numerous other organizations have directly or indirectly contributed to Snow Owl, including:

Singapore Ministry of Health

American Dental Association

University of Nebraska Medical Center (USA)

Federal Public Service of Public Health (Belgium)

Danish Health Data Authority

Health and Welfare Information Systems Centre (Estonia)

Department of Health (Ireland)

New Zealand Ministry of Health

Norwegian Directorate of eHealth

Integrated Health Information Systems (Singapore)

National Board of Health and Welfare (Sweden)

eHealth Suisse (Switzerland)

National Library of Medicine (USA)

National Release Centers and other Care Providers provide their own SNOMED CT Edition distribution for third-party consumers in RF2 format. Importing their Edition distribution instead of the International Edition directly into the SNOMEDCT pre-initialized SNOMED CT Code System with the same SNOMED CT RF2 Import API makes both the International Edition (always included in Edition packages) and the National Extension available for read-only access.

The single edition scenario provides access to any SNOMED CT Edition directly on the pre-initialized SNOMEDCT Code System without much effort. It is easy to set up and maintain. Because of its flat structure, it is good for distribution and extension consumers. Although it can be used for authoring in certain scenarios, it is not the best choice for extension authoring and maintenance because it makes no distinction between the International Edition and the Extension.

Pros:

Good for maintaining the SNOMED CT International Edition

Good for distribution

Simple to set up and maintain

Cons:

Not recommended for extension authoring and maintenance

Not recommended for multi-extension distribution scenarios

Snow Owl is a multi-purpose terminology server with a main focus on SNOMED CT International Edition and its Extensions. Whether you are a producer of a SNOMED CT Extension or a consumer of one, Snow Owl has you covered. As always, feel free to ask your questions regarding any of the content you read here (raise a ticket on GitHub Issues).

Snow Owl uses the following basic concepts to provide authoring and maintenance support for SNOMED CT Extensions.

From the page, we've learned what is a Repository and how Code Systems are defined as part of a Repository.

SNOMED CT Extensions in Snow Owl are Code Systems with their own set of properties and characteristics. With Snow Owl's Code System API, a Code System can be created for each SNOMED CT Extension to easily identify the Code System and its components with a single unique identifier, called the Code System short name. The recommended naming approach when selecting the unique short name identifier is the following:

SNOMED CT International Edition: SNOMEDCT - often included in other editions for distribution purposes

National Release Center (single maintained extension) - SNOMEDCT-US - represents the SNOMED CT United States of America Extension

National Release Center (multiple maintained extensions) - SNOMEDCT-UK-CL, SNOMEDCT-UK-DR

The primary namespace identifier and set of modules and languages can be set during the creation of the Code System, and can be updated later on if required. These properties can be used when users are accessing the terminology server for authoring purposes to provide a seamless authoring experience for the user without them needing to worry about selecting the proper namespace, modules, language tags, etc. (NOTE: this feature is not available yet in the OSS version of Snow Owl)

A Snow Owl Code System can be marked as an extensionOf another Code System, which ties them together, forming a dependency between the two Code Systems. A Code System can have multiple Extension Code Systems, but a Code System can only be extensionOf a single Code System.

In Snow Owl, a Repository maintains a set of branches, and Code Systems are always attached to a dedicated branch. For example, the default root Code Systems are always tied to the default branch, called MAIN. When creating a new Code System, the "working" branchPath can be specified, and doing so assigns the branch to the Code System. A Code System cannot be attached to multiple branches at the same time, and a branch can only be assigned to a single Code System in a Repository. Snow Owl's branching infrastructure allows the use of isolated environments for both distribution and authoring workflows, so branches play a crucial role in SNOMED CT Extension management as well. They also support a seamless upgrade mechanism, which can be used whenever a new version becomes available in one of your SNOMED CT Extension's dependent Code Systems.

As in real life, a Code System can have zero or more versions (or with another name, releases). A version is a special branch that is created during the versioning process and makes the currently available latest content accessible later in its current form. Since SNOMED CT Extensions can have releases as well, creating a Code System Version in Snow Owl is a must to produce the release packages.

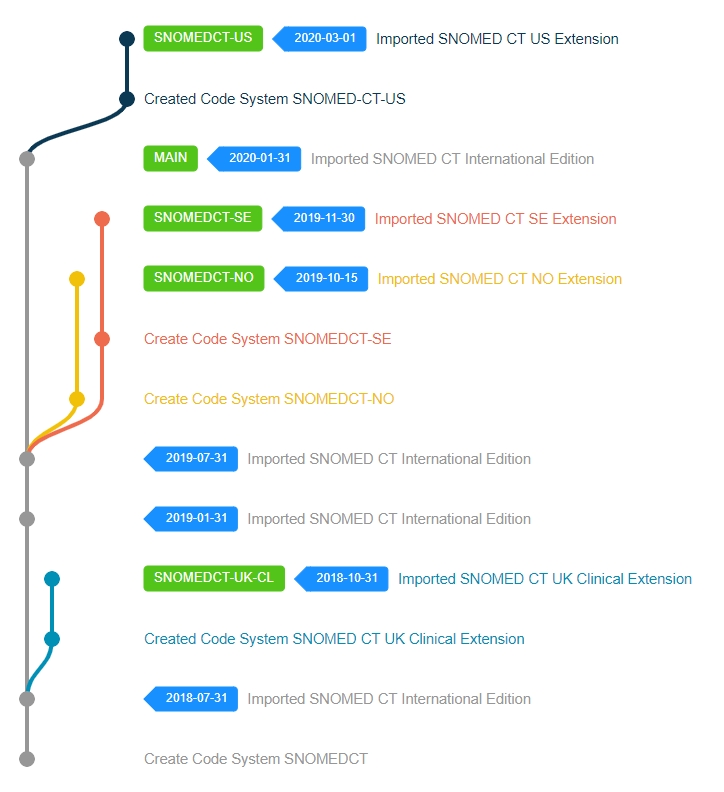

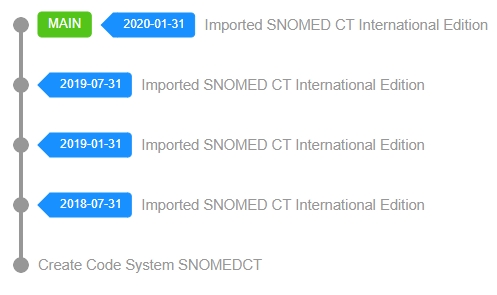

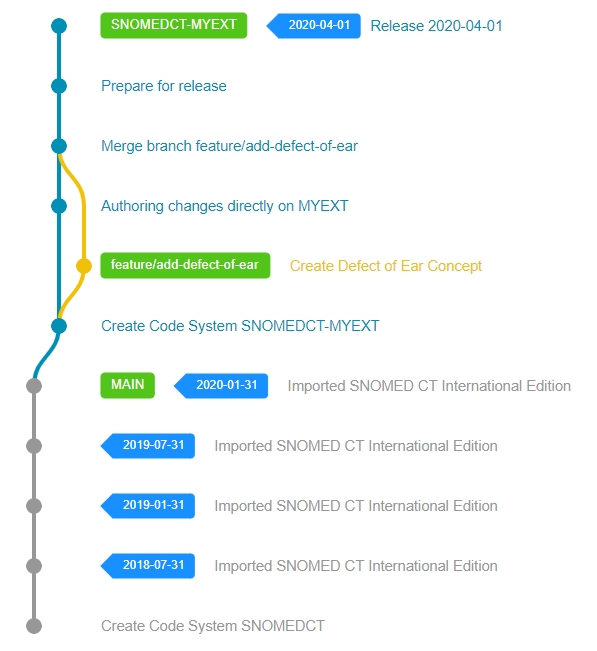

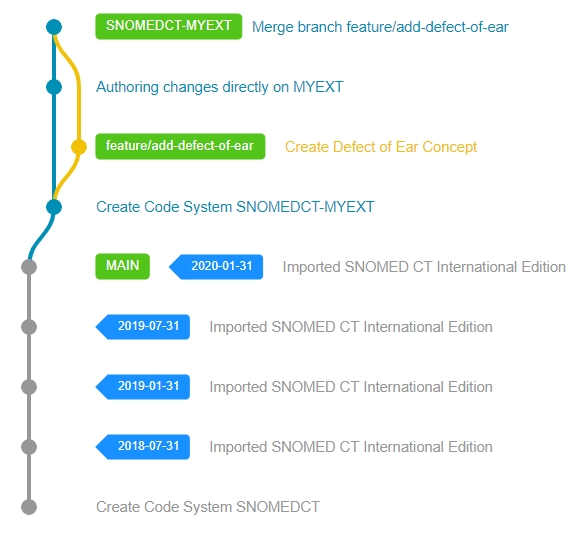

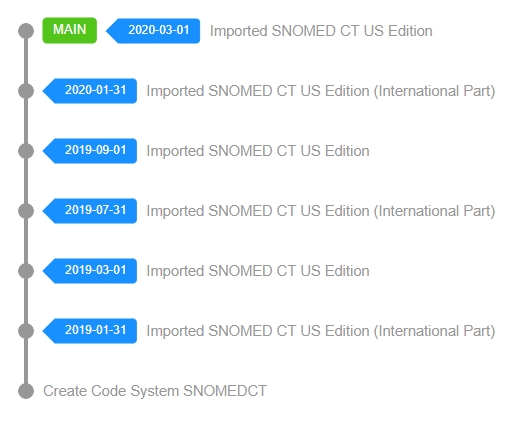

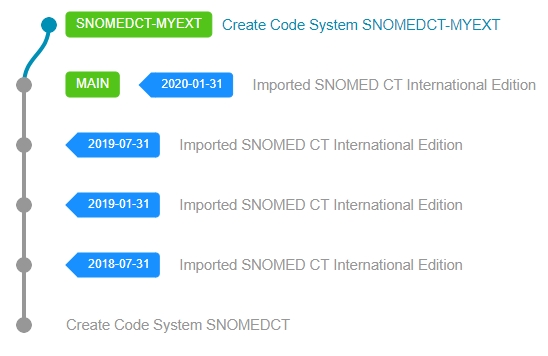

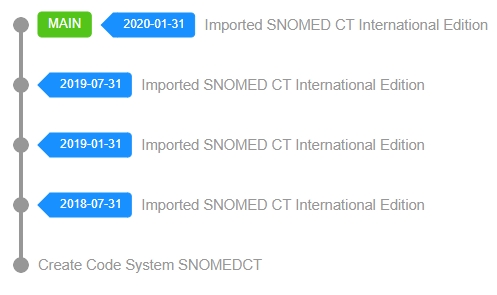

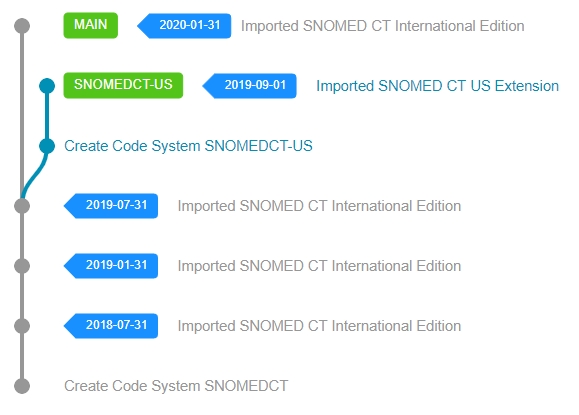

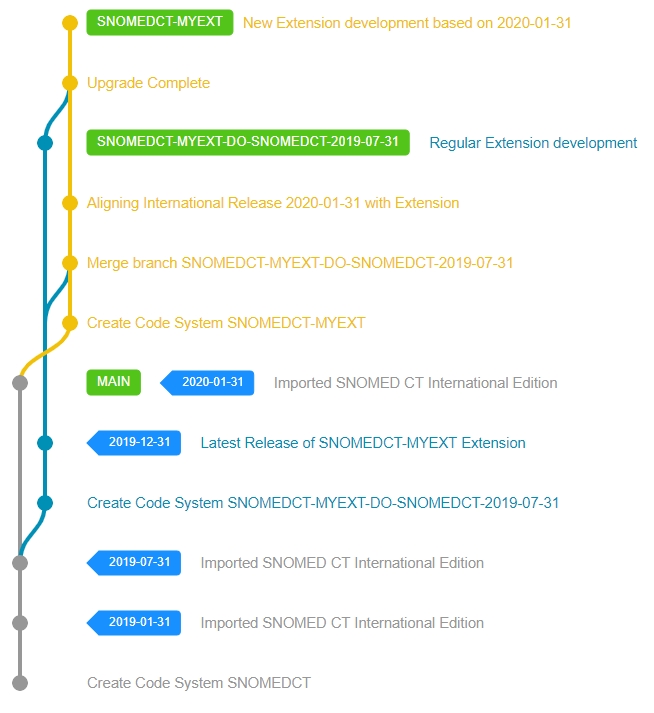

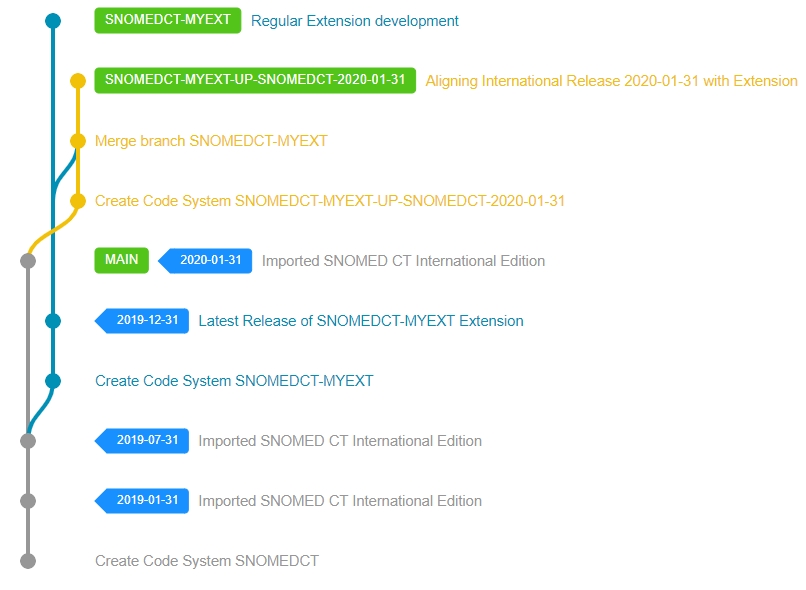

The following image shows the repository content rendered from the available commits, after a successful International Edition import.

Dots represent commits made with the commit message on the right. Green boxes represent where the associated branch's HEAD is currently located. Blue tag labels represent versions created during the commit.

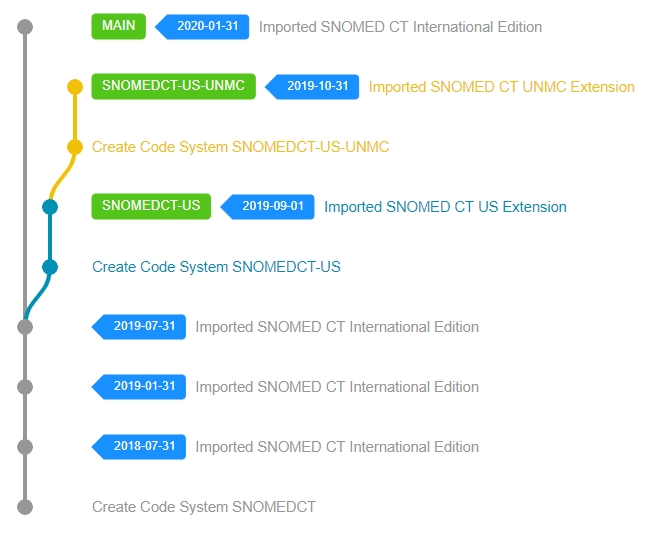

If your use case would be to import the SNOMED CT US Extension 2019-09-01 version into this repository, then ideally it would look like this:

The next section describes the use case scenarios in the world of SNOMED CT and the recommended approaches for deploying these scenarios in Snow Owl.

Ideally, Snow Owl should run alone on a server and use all of the resources available to it. To do so, you need to configure your operating system to allow the user running Snow Owl to access more resources than allowed by default.

The following settings must be considered before going to production:

Where to configure system settings depends on which package you have used to install Snow Owl, and which operating system you are using.

When using the .zip or .tar.gz packages, system settings can be configured:

temporarily with ulimit, or

permanently in /etc/security/limits.conf.

When using the RPM or Debian packages, most system settings are set in the system configuration file. However, systems that use systemd require that system limits are specified in a systemd configuration file.

On Linux systems, ulimit can be used to change resource limits temporarily. Limits usually need to be set as root before switching to the user that will run Snow Owl. For example, to set the number of open file handles (ulimit -n) to 65,536, you can do the following:
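A minimal sketch of such a session is given in the comments below, assuming the service account is named snowowl (the user name used in the limits.conf example in this guide). The check_nofile function is a hypothetical helper, not part of Snow Owl; it only reports whether the current shell's soft limit on open files meets a given minimum.

```shell
# Interactive steps (run by hand, as root, before starting Snow Owl):
#   sudo su            # become root
#   ulimit -n 65536    # raise the open file limit for this shell and its children
#   su snowowl         # switch to the user that runs Snow Owl (assumed name)

# Hypothetical helper: report whether the soft limit on open files is at least $1.
check_nofile() {
  min="$1"
  current="$(ulimit -n)"
  if [ "$current" = "unlimited" ] || [ "$current" -ge "$min" ]; then
    echo "ok: open files limit is $current"
  else
    echo "too low: open files limit is $current (need at least $min)"
    return 1
  fi
}
```

Running `check_nofile 65536` as the Snow Owl user, in the same session that will start the server, shows whether the raised limit was actually inherited.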

The new limit is only applied during the current session.

You can consult all currently applied limits with ulimit -a.

On Linux systems, persistent limits can be set for a particular user by editing the /etc/security/limits.conf file. To set the maximum number of open files for the snowowl user to 65,536, add the following line to the limits.conf file:
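Entries in limits.conf follow the format `<domain> <type> <item> <value>`, where `-` as the type sets both the soft and the hard limit, so for this case the line would be `snowowl  -  nofile  65536`. The sketch below appends that line only if it is not already present. add_nofile_limit is a hypothetical helper, and its optional third argument exists only so that it can be tried on a scratch file; writing to the real file requires root.

```shell
# Hypothetical helper: append "<user>  -  nofile  <value>" to a limits file
# unless the exact line is already there.
add_nofile_limit() {
  user="$1"
  value="$2"
  file="${3:-/etc/security/limits.conf}"   # default target; needs root
  line="$user  -  nofile  $value"
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Try it on a scratch file first:
scratch="$(mktemp)"
add_nofile_limit snowowl 65536 "$scratch"
cat "$scratch"
```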

This change will only take effect the next time the snowowl user opens a new session.

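Ubuntu ignores the limits.conf file for processes started by init.d. To enable the limits.conf file, edit /etc/pam.d/su and make sure the line that loads pam_limits.so (session required pam_limits.so) is not commented out.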

When using the RPM or Debian packages, system settings and environment variables can be specified in the system configuration file, which is located in:

However, for systems that use systemd, system limits need to be specified via systemd.

When using the RPM or Debian packages on systems that use systemd, system limits must be specified via systemd.

The systemd service file (/usr/lib/systemd/system/snowowl.service) contains the limits that are applied by default.

To override them, add a file called /etc/systemd/system/snowowl.service.d/override.conf (alternatively, you may run sudo systemctl edit snowowl which opens the file automatically inside your default editor). Set any changes in this file, such as:
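As a sketch, an override that sets the open file and process limits could be written as follows. LimitNOFILE and LimitNPROC are standard systemd directives; the values are the ones discussed in this guide, and write_override is a hypothetical helper that takes the target directory as an argument so that it can be tried outside /etc.

```shell
# Hypothetical helper: write a systemd drop-in file with custom resource limits.
write_override() {
  dir="$1"    # e.g. /etc/systemd/system/snowowl.service.d (needs root)
  mkdir -p "$dir"
  cat > "$dir/override.conf" <<'EOF'
[Service]
LimitNOFILE=65536
LimitNPROC=4096
EOF
}

# Dry run in a scratch directory:
scratch_dir="$(mktemp -d)"
write_override "$scratch_dir"
cat "$scratch_dir/override.conf"
```

On a real host the target directory would be /etc/systemd/system/snowowl.service.d, and the helper would need to run with root privileges.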

Once finished, reload the systemd units by running sudo systemctl daemon-reload.

The technology stack behind the Terminology Server consists of the following components:

- The Terminology Server application
- Elasticsearch as the data layer
- Optional: Authentication/Authorization service
  - Either an OpenID Connect/OAuth2.0 compatible external service with JSON Web Token support
  - Or an LDAP-compliant directory service
- Optional: A reverse proxy handling the requests towards the REST API

Outgoing communication from the Terminology Server goes via:

HTTP(s) towards Elasticsearch and to the external OpenID Connect/OAuth2 authorization server

LDAP(s) towards the A&A service

Incoming communication is handled through the HTTP port 8080.

A selected reverse proxy channels all incoming traffic through to the Terminology Server.

Elasticsearch versions supported by each major version of Snow Owl:

The Elasticsearch cluster can either be:

a co-located, single-node, self-hosted cluster

a managed Elasticsearch cluster hosted by

Having a co-located Elasticsearch service next to the Terminology Server directly impacts the hardware requirements. See our list of recommended hardware on the .

For authorization and authentication, the application supports external OpenID Connect/OAuth2 compatible authorization services (e.g. Auth0) and any traditional LDAP Directory Servers. We recommend starting with and evolving to other solutions later because it is easy to set up and maintain while keeping Snow Owl's user data isolated from any other A&A services.

A reverse proxy, such as is recommended to be utilized between the Terminology Server and either the intranet or the internet. This will increase security and help with channeling REST API requests appropriately.

With a preconfigured domain name and DNS record, the default installation package can take care of requesting and maintaining the necessary certificates for secure HTTP. See the details of this in the Configuration section.

To simplify integration and enable interoperability with third-party systems, Snow Owl TS offers two forms of accessing terminology resources via HTTP requests:

An API compliant with the requirements listed in the FHIR R5 specification for terminology services: https://hl7.org/fhir/R5/terminology-service.html

A native API that is customized to match Snow Owl's internal representation of its supported resources, and so can provide more options

The following pages provide additional information on each method of access:

A comprehensive set of examples is available in our Postman collection:

Port 8080 and the context path /snowowl are assigned in the default installation for serving both APIs. Navigate to http(s)://<host>:8080/snowowl/ to visit the built-in "interactive playground", which lists all available requests by category in the dropdown on the top left:

Once valid user credentials are entered on the "Authentication" page reachable from the sidebar, it becomes possible to send requests to the server and inspect the returned response body as well as any relevant headers:

Select a request from the sidebar so that its documentation page appears in the main area. Each request is accompanied by a short description and the list of parameters it accepts. Fields marked with a * symbol are required.

Press the Try button after populating the input fields to execute the request:

A typical extension scenario is the development of the extension itself. Whether you are starting your extension from scratch or already have a well-developed version that you need to maintain, the first choice you need to make is to identify the dependencies of your SNOMED CT Extension.

If your Extension extends the SNOMED CT International Edition directly, then you need to pick one of the available International Edition versions:

If you are starting from scratch, it is always recommended to select the latest International Release as the starting point of your Extension.

If you have an existing Extension then you probably already know the International Release version your Extension depends on.

When you have identified the version you need to depend on, you first need to import that version into Snow Owl (or a later release package that also includes that version in its FULL RF2 content). Make sure that the createVersion feature of the RF2 import process is enabled, so that it automatically creates a version for each imported RF2 effectiveTime value.

After you have successfully imported all dependencies into Snow Owl, the next step is to create a Code System that represents your SNOMED CT Extension (see ). When creating the Code System, besides specifying the namespace and optional modules and languages, you need to enter a Code System shortName, which will serve as the unique identifier of your Extension, and select the extensionOf value, which represents the dependency of the Code System.

After you have successfully created the Code System representing your Extension, you can import any existing content from its most recent release or start from scratch by creating the module concept of your extension.

If your Extension needs to extend another Extension and not the International Edition itself, then you need to identify the version of that Extension you'd like to depend on (which indirectly selects the International Edition dependency as well). When you have identified all required versions, start from the International Edition and work through the dependency chain, repeating the RF2 import and Code System creation steps described in the previous section for each dependency until you have finally imported your own Extension. In the end your extension might look like this, depending on how many Extensions you depend on.

Setting up a Snow Owl deployment like this is not an easy task. It requires a thorough understanding of each SNOMED CT Extension you'd like to import and of their dependencies as well. However, after the initial setup, the maintenance of your Extension becomes straightforward, thanks to the clear separation from the International Edition and from its other dependencies. The release process is easier, and you can choose to publish your Extension as an extension-only release, as an Edition, or both (see ). Additionally, when a new version is available in one of the dependencies, you will be able to upgrade your Extension with the help of automated validation rules and upgrade processes (see ). From the distribution perspective, this scenario shines when you need to maintain multiple Extensions/Editions in a single deployment.

Pros:

Excellent for authoring and maintenance

Good for distribution

Cons:

Harder to set up the initial deployment

The files and folders extracted from the release package, and their roles, are described below.

Contains every configuration file used for the docker stack, including docker-compose.yml.

In Docker, this directory serves as the context, so docker compose commands must either reference the configuration file explicitly or be run from within this directory.

E.g. to verify the status of the stack there are two approaches:

Execute the command inside ./snow-owl/docker
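Both approaches can be sketched as below. The second one, passing the compose file with docker compose's -f option, is one reading of "explicitly reference the configuration file"; the function names are hypothetical and only wrap the two invocations.

```shell
# Approach 1: run the command from inside the context directory.
stack_status_in_dir() {
  (cd "$1" && docker compose ps)
}

# Approach 2: stay where you are and point docker compose at the file.
stack_status_with_file() {
  docker compose -f "$1/docker-compose.yml" ps
}

# Usage, assuming the package was extracted into the current directory:
#   stack_status_in_dir ./snow-owl/docker
#   stack_status_with_file ./snow-owl/docker
```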

Using the custom backup container it is possible to restore:

the Elasticsearch indices

the OpenLDAP database (if present)

To restore any of the data the following steps have to be performed:

Now that SNOMED CT content is present in the code system (identified by the unique id SNOMEDCT), it is time to take a deeper dive. A frequent interaction is to retrieve the properties of a concept identified by its SNOMED CT identifier. To do so, execute the following command:
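A sketch of such a request is shown below. The base URL http://localhost:8080/snowowl follows the default port and context path of the installation; the /snomedct/<codeSystem>/concepts/<id> path layout and the pt() expand value are assumptions about the native API and should be checked against the API reference. 138875005 is the identifier of the SNOMED CT root concept, and concept_url is a hypothetical helper.

```shell
# Hypothetical helper: build the URL for reading one concept with its PT expanded.
concept_url() {
  base="$1"          # e.g. http://localhost:8080/snowowl
  code_system="$2"   # e.g. SNOMEDCT
  concept_id="$3"    # e.g. 138875005 (SNOMED CT root concept)
  printf '%s/snomedct/%s/concepts/%s?expand=pt()\n' "$base" "$code_system" "$concept_id"
}

concept_url http://localhost:8080/snowowl SNOMEDCT 138875005
# To send the request against a running server:
#   curl "$(concept_url http://localhost:8080/snowowl SNOMEDCT 138875005)"
```

If authentication is enabled on the server, curl also needs credentials, for example via its -u option.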

The response should look something like this:

We used the expand query parameter to include the concept's Preferred Term (PT) in the response. The concept in question is the root concept of the SNOMED CT hierarchy.

Snow Owl security features enable you to easily secure your terminology server. You can password-protect your data as well as implement more advanced security measures such as role-based access control and auditing.

By default, Snow Owl comes without any security features enabled and all read and write operations are unprotected. To configure a security realm, you can choose from the following built-in identity providers: